Blame Bad Incentives for Bad Science

Scientists have to publish a constant stream of new results to succeed. But in the process, their success may lead to science’s failure, two new studies warn.

How easy is it really to exactly replicate a scientific experiment by just reading the published result?

The REF star system encourages novelty but offers no incentive to replicate studies – and replication is exactly what scientists need to do to be more sure of their claims.

BioBlocks is an open-source, web-based visual development environment for describing and executing experimental protocols on local robotic platforms or remotely, i.e. in the cloud. It aims to serve as a 'de facto' open standard for programming protocols in biology.

P-hacking is the manipulation of data and research methods to achieve statistical significance. And it could be why so many research papers are false.

Failing to record the version of any piece of software or hardware, overlooking a single parameter, or glossing over a restriction on how to use another researcher's code can lead you astray.

Science's quality control processes are under question. Scientists should think about changing the rules and extending their peer communities.

Researchers with a PhD who are employed by a Dutch research institution can request funding for the replication of 'cornerstone research'.

Scientists are incentivised to publish surprising results frequently in major journals, despite the risk that such findings are likely to be wrong, research suggests.

The solution to science's replication crisis is a new ecosystem in which scientists sell what they learn from their research.

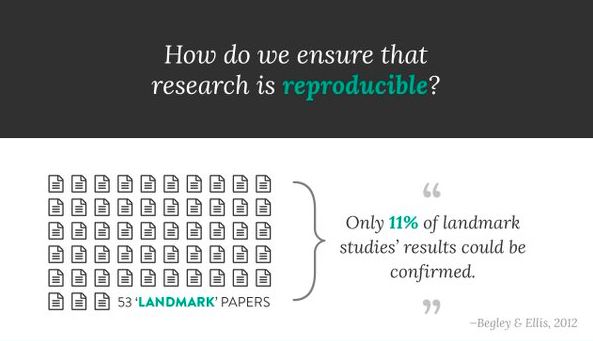

Recap of contest launched by the Winnower and the Laura and John Arnold Foundation to answer the question – How do we ensure that research is reproducible?

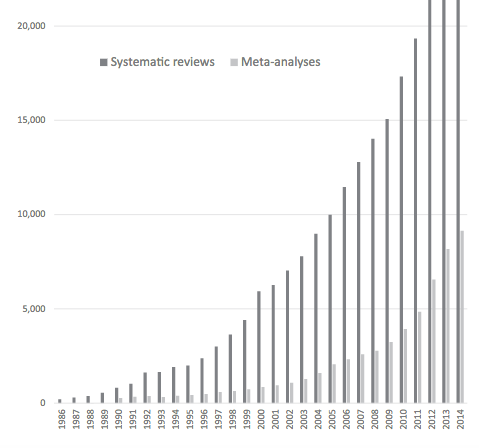

John P.A. Ioannidis argues that the production of systematic reviews and meta-analyses has reached epidemic proportions.

It's easy to misrepresent the findings from brain scan studies. Just ask a dead salmon.

Reproducible, transparent and reliable science.

Research creates its own problems. Articles may be withdrawn because of irregularities, results can be impossible to reproduce, methods are often non-standardised, and publications may not be accessible. The search is now on for solutions.

The replication crisis in science is largely attributable to a mismatch in our expectations of how often findings should replicate and how difficult it is to actually discover true findings in certain fields.

To make replication studies more useful, researchers must make more of them, funders must encourage them and journals must publish them.

As failures to replicate results using the CRISPR alternative stack up, a quiet scientist stands by his claims.

Approximately one-fifth of papers with supplementary Excel gene lists contain erroneous gene name conversions.

All software used for the analysis should be either carefully documented or, better yet, openly shared and directly accessible to others.

Science isn’t self-correcting, it’s self-destructing. To save the enterprise, scientists must come out of the lab and into the real world.

On The Natural Selection of Bad Science.

The zoo of bacteria and viruses within each lab animal may be confounding experiments.

Sanjay Srivastava’s assessment of the state of psychology mixes a certain four-letter word and gallows humor with a desire to raise awareness of important research issues in his field.

Not just a competition but a resource that will continue to be useful now and in the future as we tackle improving reproducibility in the sciences.

Stephanie Wykstra, Ivan Oransky, Stuart Buck, Brian Nosek, Julia Galef (Moderator): How can we produce research findings that are both useful and robust?

Researchers have created a new system to test influential papers for reproducibility.