The Replication Paradox

Will integrating original studies and published replications always improve the reliability of your results? No! Replication studies suffer from the same publication bias as original studies.
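The reasoning behind this claim can be illustrated with a toy simulation. This is a minimal sketch under assumptions that are not taken from the linked article: each study yields a normally distributed effect estimate with a known standard error, and only statistically significant positive results get published, for originals and replications alike. The names `simulate_study` and `pooled_estimates` and all parameter values are hypothetical.

```python
import random
import statistics


def simulate_study(true_effect, n, rng):
    """Simulate one study; return (effect estimate, whether it is significant)."""
    se = 1 / n ** 0.5                      # assumed standard error (unit-variance data)
    estimate = rng.gauss(true_effect, se)  # sampling noise around the true effect
    significant = estimate / se > 1.96     # assumed filter: positive, significant results only
    return estimate, significant


def pooled_estimates(true_effect=0.1, n=25, pairs=20000, seed=0):
    """Compare the mean of all studies with the mean of 'published' studies.

    Each pair is an original study plus one replication. Both pass through
    the same significance filter before they count as published.
    """
    rng = random.Random(seed)
    all_estimates, published_estimates = [], []
    for _ in range(pairs):
        for _ in range(2):  # original study, then its replication
            estimate, significant = simulate_study(true_effect, n, rng)
            all_estimates.append(estimate)
            if significant:
                published_estimates.append(estimate)
    return statistics.mean(all_estimates), statistics.mean(published_estimates)


if __name__ == "__main__":
    mean_all, mean_published = pooled_estimates()
    print(f"mean of all studies:       {mean_all:.3f}")
    print(f"mean of published studies: {mean_published:.3f}")
```

With these settings the mean over all studies stays close to the assumed true effect of 0.1. Every published estimate has to exceed 1.96 standard errors (about 0.39), so the published mean is inflated. Pooling published originals with published replications therefore does not remove the bias in this toy model, because both sets passed the same filter.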

A nonprofit's effort to replicate 50 top cancer papers is shaking up labs.

Science posted the most comprehensive guidelines for the publication of studies in basic science to date, calling for the adoption of clearly defined rules on the sharing of data and methods.

Study calculates cost of flawed biomedical research in the US.

Researchers face pressure to hype and report selectively, says Dorothy Bishop.

Initiative trying to validate 50 cancer papers finds difficulty in accessing original study data.

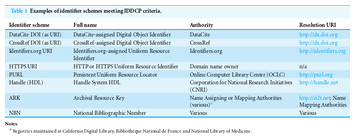

The paper proposes how to achieve widespread, uniform human and machine accessibility of deposited data, in support of significantly improved verification, validation, reproducibility and re-use of scholarly/scientific data.

Special Issue on reproducibility in EuroScientist.

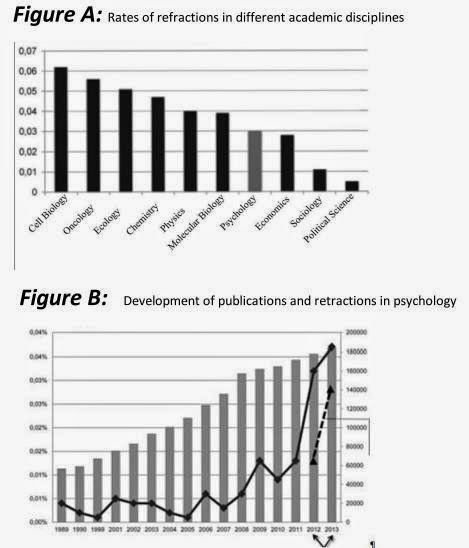

An ambitious effort to replicate 100 research findings in psychology ended last week, and the data look worrying.

In recent years science has entered a crisis of trust. The results of many scientific experiments appear to be surprisingly hard to reproduce, while mistakes have highlighted flaws in the peer review system.

Psychology has been home to some of the most infamous cases of fraud in recent years, and while it's just a few bad apples who are spoiling the bunch, the field itself has seen an overall increase in retractions.

The 4th World Conference on Research Integrity will take place in Brazil in June 2015, sponsored by the Wellcome Trust, AAAS, NPG, EMBO and others.

A workshop held by the National Research Council in the US addressed statistical challenges in assessing and fostering the reproducibility of scientific results by examining the extent of reproducibility, the causes of reproducibility failures, and potential remedies. Here's the program.

Real scientific controversies are self-correcting, as the BICEP2 and Planck example shows.

An interview with John Ioannidis, co-director of the Meta-Research Innovation Center at Stanford.

Reproducibility alone is insufficient to address the replication crisis because even a reproducible analysis can suffer from many problems that threaten the validity and useful interpretation of the results.

The truth can be hard to find with millions of data points and lots of room for error.

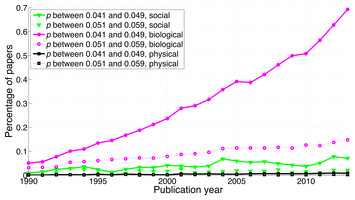

Study on the abundance of positive results in the scientific literature.

We propose to use the R-factor, a metric that indicates whether a report or its conclusions have been verified.

We propose steps to help increase the transparency of the scientific method and the reproducibility of research results: specifically, we introduce a peer-review oath and accompanying manifesto.

A guide to the popular, free statistics and visualization software that gives scientists control of their own data analysis.

This article describes a systematic analysis of the relationship between empirical data and theoretical conclusions for a set of experimental psychology articles published in the journal Science between 2005 and 2012.

The current incentive structure often leads to dead-end studies, but there are ways to fix the problem.

Independent replication of studies before publication may reveal sources of unreliable results.

Consensus on reporting principles aims to improve quality control in biomedical research and encourage public trust in science.

With the increasing complexities of new technologies and techniques, coupled with the specialisation of experiments, reproducing research findings has become a growing challenge.

Research Practices that May Help Increase the Proportion of True Research Findings

A new way to measure whether an experimental result is really replicated.