The Lego approach to scientific publishing

In this interview with EuroScientist, Lawrence Rajendran explains why he created Matters, to change the way we communicate science.

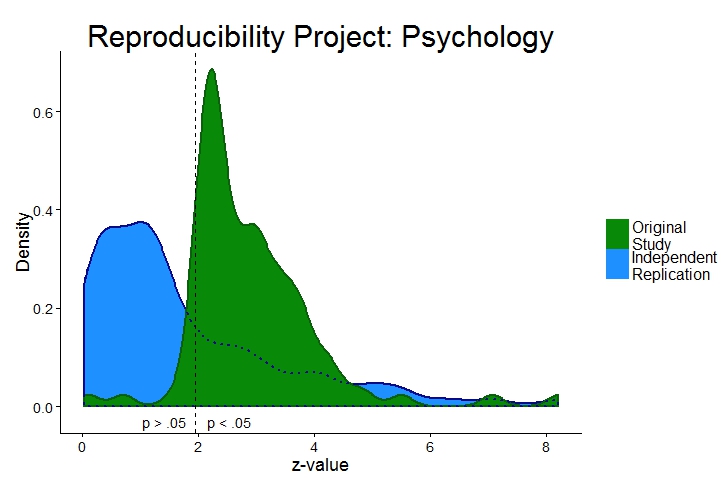

The replicability of psychological research is surprisingly low. Why? In this blog post I present new evidence showing that questionable research practices contributed to failures to replicate psyc…

Dodgy results are fuelling flawed policy decisions and undermining medical advances. They could even make us lose faith in science. New Scientist investigates

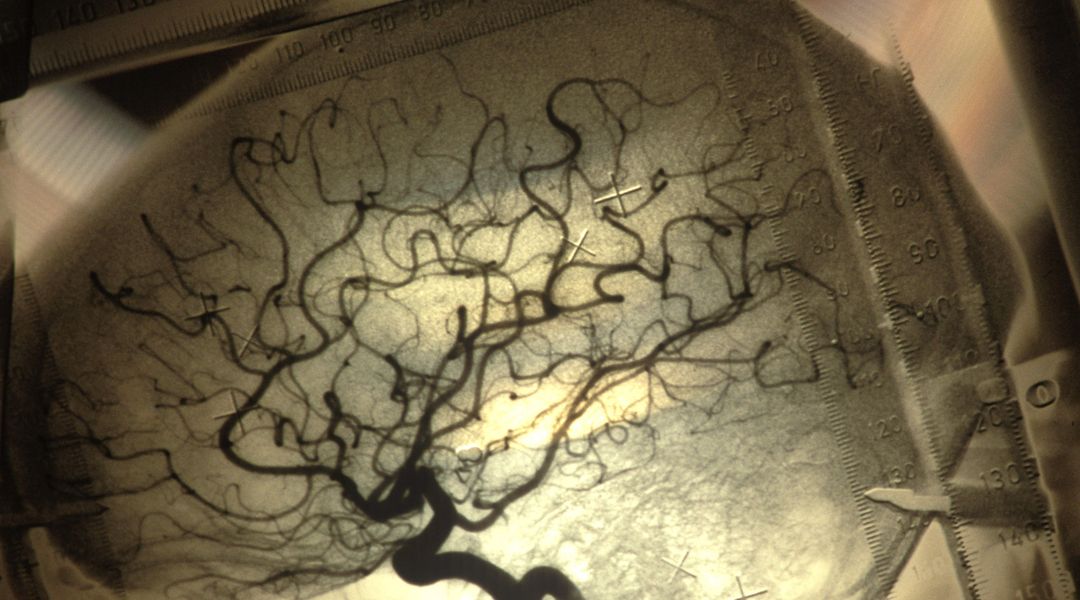

There’s a replication crisis in biomedicine—and no one even knows how deep it runs.

And how to fix them. By Ivan Oransky and Adam Marcus.

Scientific journal policies, physics' head start with arXiv, and differences in the culture of the two disciplines may all play a role.

Two high-profile cases in which universities — who by US law are the ones that must open an investigation into misconduct — stonewalled the effort.

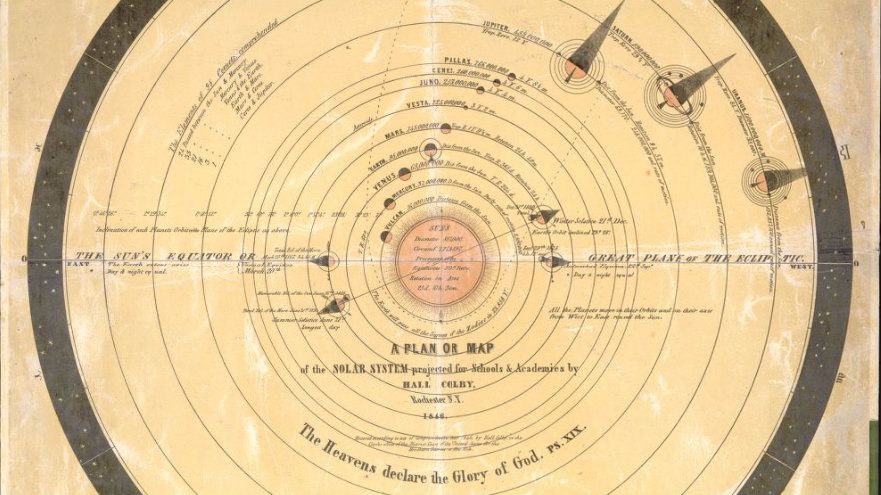

By fetishising mathematical models, economists turned economics into a highly paid pseudoscience

A famous faked study gets proved right—by the people who unmasked it in the first place.

On the democratization of science via the Internet and the dramatic change in the communication of data and in their interpretation.

Some scientists are taking it upon themselves to go beyond their core research areas to study where the scientific system can go wrong.

Perspectives: Structural biologist suggests that chemists can help redefine good research behavior by telling the whole story

The replication crisis is a sign that science is working.

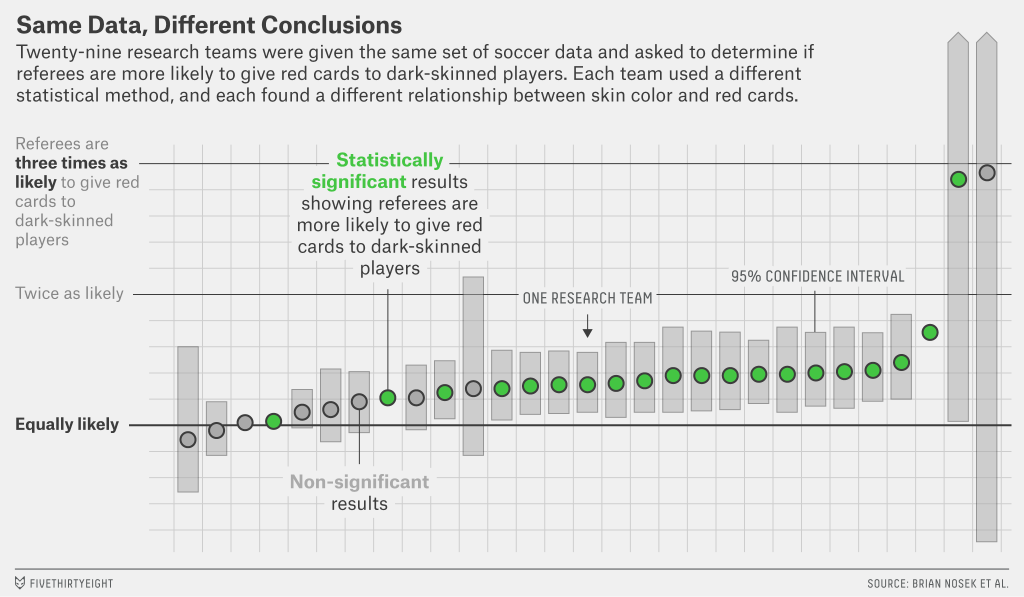

Social science is great at making wacky, wonderful claims about the way the world—and the human mind—works. But a lot of them are wrong.

Amid the so-called replication crisis, could better investigative reporting be the answer? Maybe it’s time for journalists and scientists to work more closely together.

Once again, reproducibility is in the news. Most recently we hear that irreproducibility is irreproducible and thus everything is actually fine...

The field is currently undergoing a painful period of introspection. It will emerge stronger than before.

“Science isn’t about truth and falsity, it’s about reducing uncertainty.”

Combining commercial and academic incentives and resources can improve science, argues Aled Edwards.

Researchers on social media ask at what point replication efforts go from useful to wasteful.

Some researchers think science should be small again.

Scholars in the UK and Australia are contemptuous of impact statements and often exaggerate them, study suggests.

Jesse Singal argues that the critique by Gilbert et al on the Reproducibility Project isn’t as muscular as it appears at first glance.

An influential psychological theory, borne out in hundreds of experiments, may have just been debunked. How can so many scientists have been so wrong?

Policy statement aims to halt missteps in the quest for certainty: the misuse of the P value is contributing to the number of research findings that cannot be reproduced, warns the American Statistical Association.

Is science quite as scientific as it's supposed to be? After years of covering science in the news, Alok Jha began to wonder whether science is as rigorous as it should be.

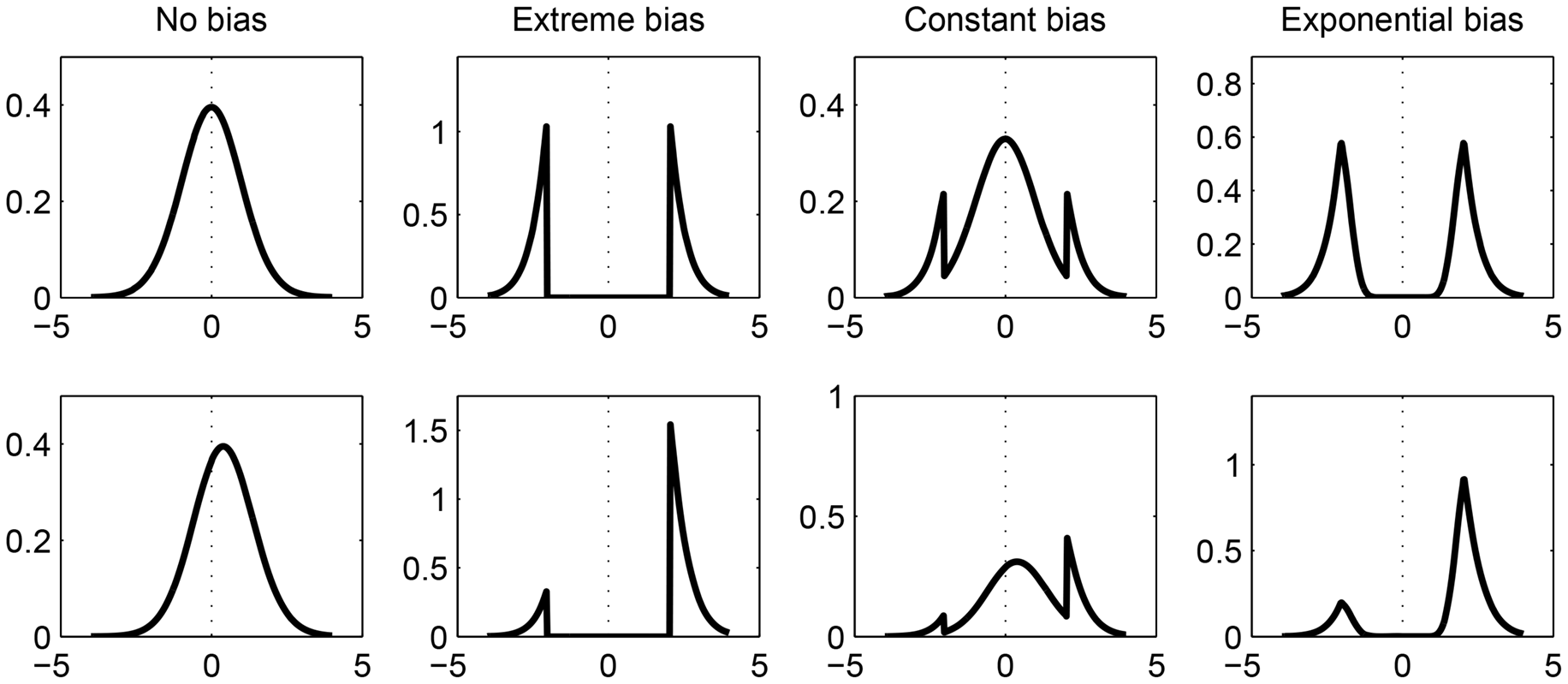

We revisit the results of the recent Reproducibility Project: Psychology by the Open Science Collaboration. We compute Bayes factors—a quantity that can be used to express comparative evidence for an hypothesis but also for the null hypothesis—for a large subset (N = 72) of the original papers and their corresponding replication attempts. In our computation, we take into account the likely scenario that publication bias had distorted the originally published results. Overall, 75% of studies gave qualitatively similar results in terms of the amount of evidence provided. However, the evidence was often weak (i.e., Bayes factor < 10). The majority of the studies (64%) did not provide strong evidence for either the null or the alternative hypothesis in either the original or the replication, and no replication attempts provided strong evidence in favor of the null. In all cases where the original paper provided strong evidence but the replication did not (15%), the sample size in the replication was smaller than the original. Where the replication provided strong evidence but the original did not (10%), the replication sample size was larger. We conclude that the apparent failure of the Reproducibility Project to replicate many target effects can be adequately explained by overestimation of effect sizes (or overestimation of evidence against the null hypothesis) due to small sample sizes and publication bias in the psychological literature. We further conclude that traditional sample sizes are insufficient and that a more widespread adoption of Bayesian methods is desirable.
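To make the "Bayes factor < 10" threshold in the abstract above concrete, here is a minimal Python sketch. It is not the paper's method: it does not model publication bias, and it uses a toy binomial test (a point null of θ = 0.5 against a uniform prior on θ) chosen purely for illustration. The function names `bayes_factor_10` and `classify` and the example counts are assumptions, not taken from the study.

```python
from math import comb


def bayes_factor_10(k: int, n: int) -> float:
    """Bayes factor for H1 (theta ~ Uniform(0, 1)) over H0 (theta = 0.5),
    given k successes in n binomial trials. Toy model, assumed for illustration."""
    # Under a uniform prior, the binomial likelihood integrates to 1 / (n + 1).
    marginal_h1 = 1.0 / (n + 1)
    # Likelihood of the data under the point null theta = 0.5.
    likelihood_h0 = comb(n, k) * 0.5 ** n
    return marginal_h1 / likelihood_h0


def classify(bf10: float, threshold: float = 10.0) -> str:
    """Label the evidence using the threshold of 10 mentioned in the abstract."""
    if bf10 >= threshold:
        return "strong evidence for H1"
    if bf10 <= 1.0 / threshold:
        return "strong evidence for H0"
    return "weak evidence"


# Same observed proportion (70%), two hypothetical sample sizes.
small = bayes_factor_10(k=14, n=20)
large = bayes_factor_10(k=140, n=200)

print(f"n=20:  BF10 = {small:.2f} -> {classify(small)}")
print(f"n=200: BF10 = {large:.3g} -> {classify(large)}")
```

In this toy setting, the same observed proportion counts as weak evidence at n = 20 and strong evidence at n = 200, which is in the spirit of the abstract's point that small samples often leave the question unresolved either way.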

Reanalysis of last year's enormous replication study argues that there is no need to be so pessimistic.

Compared with psychology, the replication rate "is rather good," researchers say