Designing a New Type of Journal Metric

At the Researcher to Reader conference, a volunteer project called Project Cupcake was launched to define a new suite of indicators to help researchers judge publishers, rather than the other way around.

Is it reasonable to employ the ResearchGate Score as evidence of scholarly reputation?

ORCID wasn't intended as a massive longitudinal survey of human migration, but with 3 million profiles and growing, it is becoming just that.

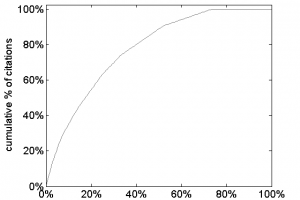

A brief summary of the main citation indicators used today.

ResearchGate and similar services represent a “gamification” of research, drawing on features usually associated with online games, like rewards, rankings and levels.

Remember the Kardashian index? That was Neil Hall's 2014 tongue-in-cheek(ish) dig at science Twitter and "Science Kardashians" - scientists with a high Twitter-follower-to-citation ratio.

Science panels still rely on poor proxies to judge quality and impact.

Altmetrics is a novel method to track and measure the social impact of scientific publications and also the influence of a researcher.

Initiative for Open Citations makes citation data free for all

The Initiative for Open Citations (I4OC) is a collaboration between scholarly publishers, researchers, and other interested parties to promote the unrestricted availability of scholarly citation data.

A coalition of scholarly publishers, researchers, and nonprofit organizations launched the Initiative for Open Citations (I4OC), a project to promote unrestricted open access to scholarly citation data.

A review showing that some metrics in widespread use cannot be used as reliable indicators of research quality.

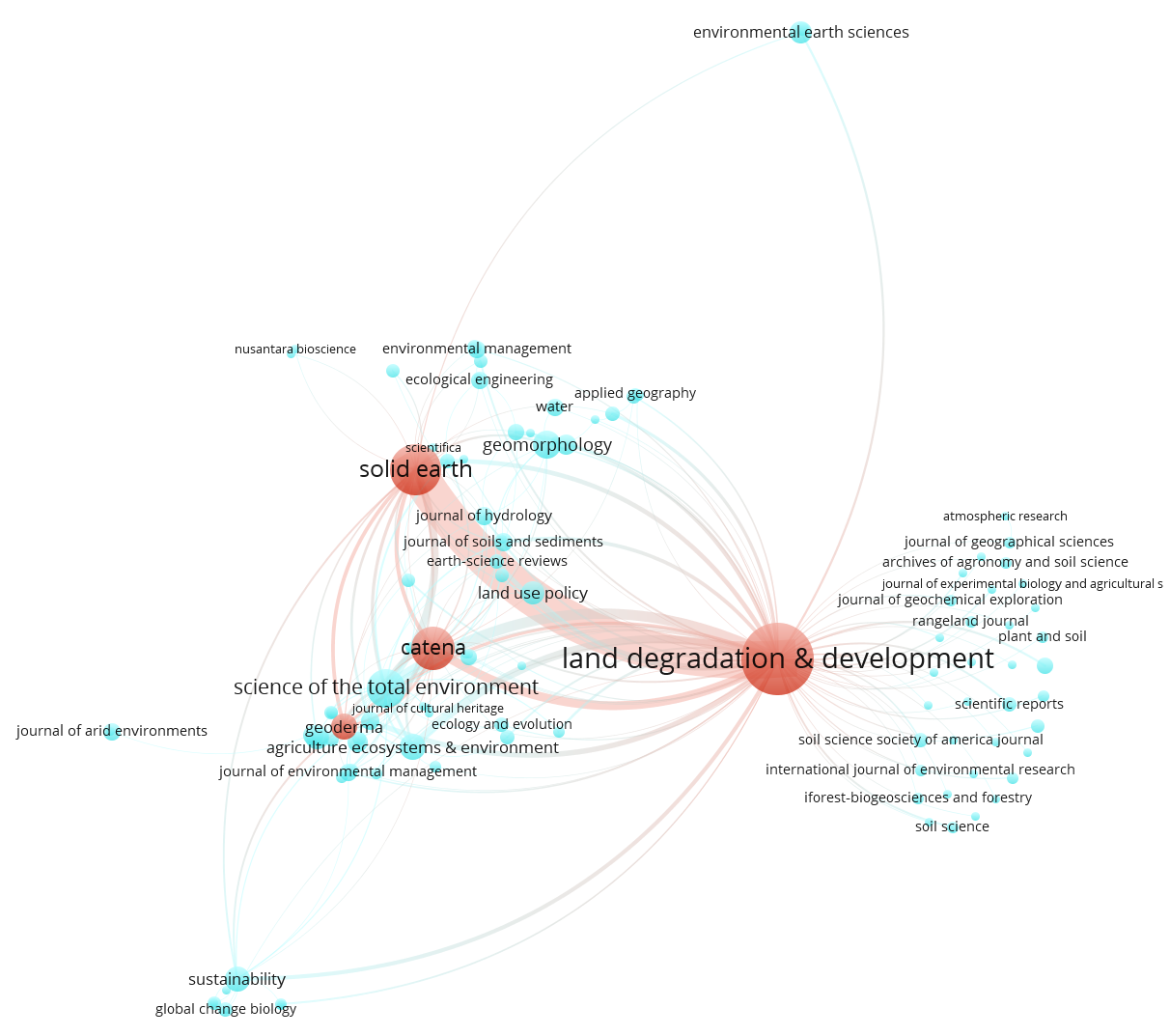

Citation cartels are groups of researchers and journals that team up with the specific intent of affecting the number of citations their publications receive.

Responsible metrics and evaluation for open science.

It’s not just about distributing credit where it’s due

Many bibliometricians and university administrators remain wary of Google Scholar citation data, preferring “the gold standard” of Web of Science instead.

Allow me to pull back the curtain. Scientist #1 is writing a paper and wants to add a reference in the introduction.

How much can a single editor distort the citation record? An investigation documents a rogue editor's coercion of authors to cite his journal and papers.

Without any doubt, the journal impact factor (IF) is one of the most debated scientometric indicators. Its use for assessing individual articles and their authors is especially controversial.

Slides from my AAAS '17 talk, part of the panel: "Mind the Gaps: Wikipedia as a Tool to Connect Scientists and the Public"

While the University Grants Commission’s system prioritizes peer-reviewed papers, experts not involved in the initiative express concern that it could incentivize cheating.